What’s up with the “Greta Thunberg of AI”?

California’s AI regulatory bill sponsor has taken a turn toward the apocalyptic while her sister works at a Peter Thiel-funded AI company

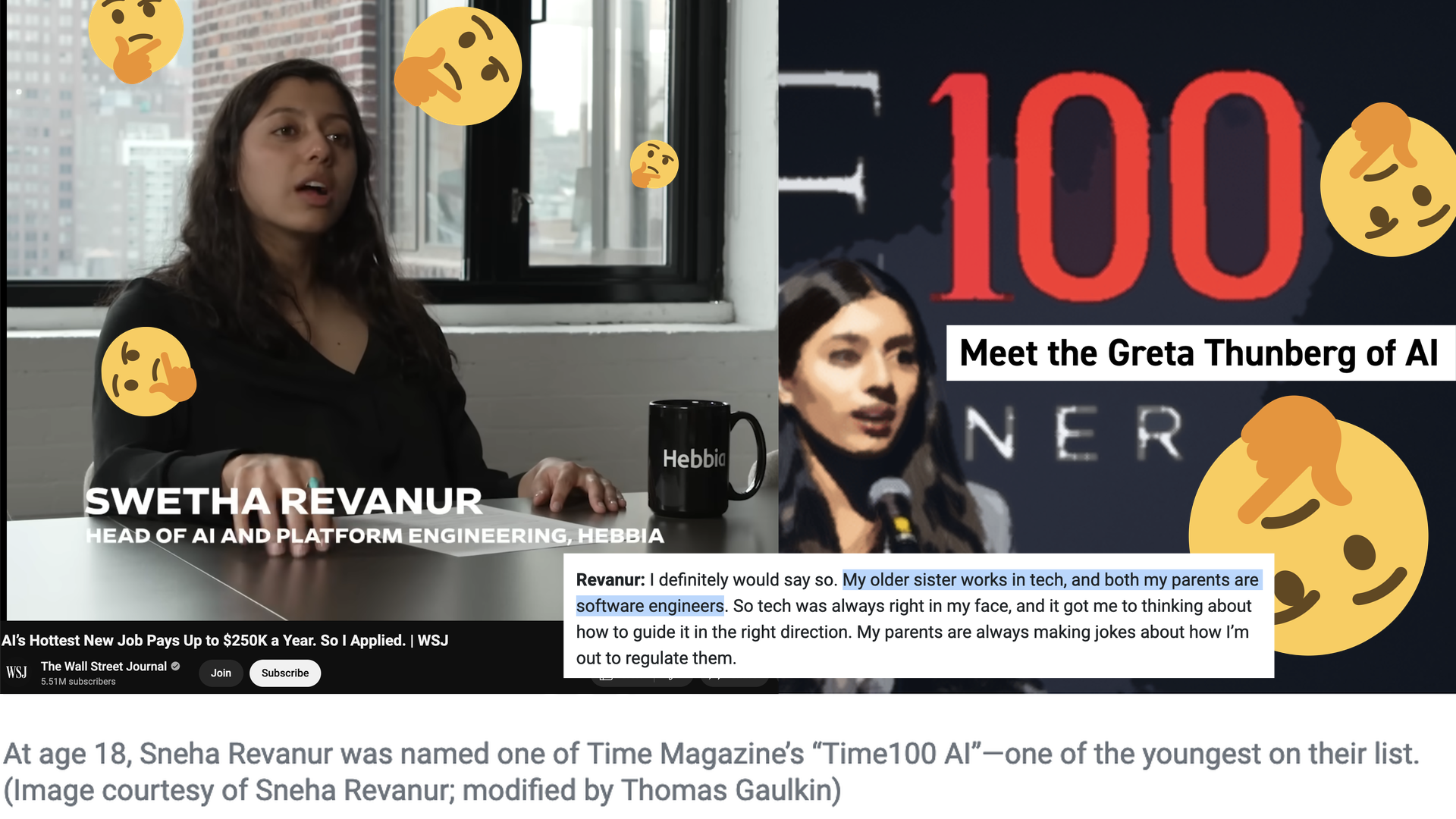

Sneha Revanur was dubbed the “Greta Thunberg of AI” in a Politico article covering her tech-related activism through her Encode Justice group[^1], which focused on social justice issues such as opposing California’s Proposition 25, a measure that would have replaced cash bail with an algorithm.

However, her activism has taken a shift towards the apocalyptic as of late. In an interview with The Bulletin of the Atomic Scientists, Revanur discussed her shift away from immediate harms of machine learning misuse and towards the completely hypothetical and recently disproven risk of a genocidal “superintelligent” artificial general intelligence (AGI) ending the human race, claiming that it could present “grave existential harms for humanity” and indicating that Encode Justice would change its focus appropriately.

Accordingly, Encode Justice published an open letter called AI 2030 calling for action from governments and companies to avoid AI harms. While the letter doesn’t openly mention existential risk, it contains references to several sources that do. These include a paper from the Center for AI Safety[^2] that claims AI could leave humanity a “displaced, second-class species” as well as an open letter co-authored by Encode Justice and the Future of Life Institute[^3] warning of the presumed risks of “loss of control and facilitated manufacture of WMDs” posed by machine learning models. Encode Justice and the Center for AI Safety are also co-sponsors of California’s controversial SB 1047 bill that aims to regulate “catastrophic risks” from AI.

Later in her interview with The Bulletin of the Atomic Scientists, Revanur mentions that both her parents and her sister also work in tech, though she doesn’t mention where. Given her work, I was curious as to what specific companies they work for.

Swetha Revanur & Hebbia

After some research, I can confirm that Sneha’s sister Swetha Revanur works as the Head of Engineering for Hebbia, an AI company whose LinkedIn bills itself as a “Developer of user interfaces for Artificial General Intelligence”. Hebbia has received [250,000 prompt engineering jobs.

A blog post from the company advertises their “Matrix” software as an “Interface to AGI” that’s more powerful than other LLMs like ChatGPT while a previous version of Hebbia’s website mentions their US Air Force funding. Per LinkedIn, the company was awarded a Small Business Innovation Research contract with the Air Force for technology to automate the creation of documentation. The Outpost, Hebbia’s consulting firm that specializes in finding government contracts, described their mission as “revolutionizing data intelligence for defense applications”. This LinkedIn post went up in June 2023, a month after Politico’s profile of Sneha in which she discusses the possibility of being “more confrontational” in her AI-related activism.

It’s unclear how SB 1047 will affect Hebbia or how the legislative endorsements being sought by Encode Justice in the 2024 election will play into this, but it’s clear that there’s more going on with the Revanurs than meets the eye, especially given Google and Andreessen-Horowitz’s investment in Swetha’s work and their opposition to Sneha’s work[^4].

In her interview with The Bulletin, Sneha said that her parents joke about her work being regulation of their jobs, but this seems to literally be the case when it comes to her sister.

[^1] Encode Justice is primarily funded by the Omidyar Network, a “social change venture” created by billionaire eBay founder Pierre Omidyar.

[^2] The Center for AI Safety is one of many organizations funded by Open Philanthropy, a grantmaking group founded by Facebook co-founder Dustin Moskovitz that focuses much of its effort on hypothetical AI risk under the guise of a “longtermist” ideology that disproportionately focuses on the lives of people born in the far future over those alive today.

[^3] As I’ve previously reported, Future of Life Institute co-founder and president Max Tegmark has endorsed a book authored by his anti-vaccine brother Per Shapiro that claims modern AI was predicted by “ancient teachings of the Gnostics”. He also once offered $100,000 of FLI’s money to Nya Dagbladet, the neo-Nazi news outlet his brother has written for.

[^4] Beyond Andreessen-Horowitz’s investments, Marc Andreessen is a follower of the “effective accelerationist” ideology that opposes the regulation of technology on principle. Effective accelerationists are often in conflict with adherents to longtermism and effective altruism, its ideological predecessor.